What is MLOps?

Ever liked something on Instagram and then, almost immediately, had related content appear in your feed? Or searched for something on Google and then been spammed with ads for that exact thing moments later? These are symptoms of an increasingly automated world. Behind the scenes, they are the result of state-of-the-art MLOps pipelines. We take a look at MLOps and what it takes to deploy machine learning models effectively.

The attention mechanism is an influential idea in deep learning. Even though we often think about attention as the one implemented in transformers, the original idea came from the paper “Neural Machine Translation by Jointly Learning to Align and Translate” by Dzmitry Bahdanau et al. Understanding the origin of the powerful attention technique will help us grasp many of the ideas that grew out of it. In this article, I will describe this original attention idea. In future articles, I will cover other attention mechanisms, like the one from the Transformer model, and more recent ones applied not only to text, but also to images, video, and audio.
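For orientation, the additive attention from that paper is usually summarized by the three equations below. This is a sketch in the paper's common notation, which the summary above does not spell out: $s_{i-1}$ is the previous decoder state, $h_j$ is the encoder annotation for source position $j$, and $W_a$, $U_a$, $v_a$ are learned parameters.

$$
e_{ij} = v_a^{\top} \tanh\left(W_a s_{i-1} + U_a h_j\right), \qquad
\alpha_{ij} = \frac{\exp(e_{ij})}{\sum_{k} \exp(e_{ik})}, \qquad
c_i = \sum_{j} \alpha_{ij} h_j
$$

The context vector $c_i$ is a weighted average of the encoder annotations, with weights that depend on how well each source position matches the current decoder state.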

Top AI and Data Science Tools and Techniques for 2022 and Beyond

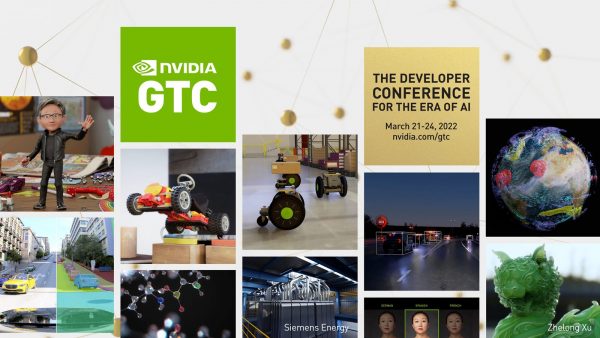

NVIDIA GTC is a free, virtual event taking place March 21–24. Data scientists will share their top tools and techniques to optimize data pipelines. You’ll learn how to accelerate your data science pipeline from data preprocessing and engineering to machine learning on GPUs, using RAPIDS open-source software. At the event, world-class Kaggle Grandmasters, top data professionals, and influencers across several industries are gathering to inspire you.

Geo-visualization of daily movements of Bike-sharing system: Helsinki and Tartu

Recently, I have started projects related to the geo-visualization of massive movement datasets at GIS4 Wildlife. I just remembered that during the 30DayMapChallenge one of my colleagues, Elias Wilberg, created a wonderful visualization about the daily movements of the Helsinki…

Multi-Head Attention

The Transformer network architecture (paper) uses stacked attention layers instead of recurrent or convolutional ones. We will only deal with one small component of the Transformer, the Multi-Head Attention module. The first application of the Transformer model was language translation. We refer to this PyTorch implementation, which uses the well-regarded Einops library.
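To make the module concrete, here is a minimal sketch of multi-head self-attention in plain Python. It is not the PyTorch/Einops implementation the article refers to: the function names, the list-of-rows matrix layout, and the choice to omit batching, masking, biases, and dropout are all assumptions made for illustration.

```python
import math


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax(row):
    """Numerically stable softmax over a list of scores."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention(q, k, v):
    """Scaled dot-product attention for a single head."""
    d_k = len(q[0])
    k_t = [list(col) for col in zip(*k)]  # transpose of k
    scores = matmul(q, k_t)               # (seq, seq) similarity scores
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v)             # weighted average of the values


def split_heads(x, num_heads):
    """Split a (seq, d_model) matrix into num_heads (seq, d_head) matrices."""
    d_head = len(x[0]) // num_heads
    return [[row[h * d_head:(h + 1) * d_head] for row in x] for h in range(num_heads)]


def multi_head_attention(x, w_q, w_k, w_v, w_o, num_heads):
    """Self-attention over x (seq, d_model); each weight is (d_model, d_model)."""
    if len(x[0]) % num_heads != 0:
        raise ValueError("d_model must be divisible by num_heads")
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    heads = [
        attention(qh, kh, vh)
        for qh, kh, vh in zip(
            split_heads(q, num_heads),
            split_heads(k, num_heads),
            split_heads(v, num_heads),
        )
    ]
    # Concatenate the per-head outputs back to (seq, d_model), then project.
    concat = [sum((head[i] for head in heads), []) for i in range(len(x))]
    return matmul(concat, w_o)
```

Each head attends over the full sequence but only sees its own slice of the projected features, which is what lets different heads focus on different relationships. A framework implementation would do the same computation with batched tensor operations.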

Understanding Sigmoid, Logistic, Softmax Functions, and Cross-Entropy Loss (Log Loss)

The most common sigmoid function used in machine learning is the logistic function, σ(x) = 1 / (1 + e^(−x)). The formula is simple, but it is quite useful because it offers us some nice properties: it maps any real-valued input into the interval (0, 1), so the output can be read as a probability. At the output layer, use a sigmoid activation with Binary Cross-Entropy Loss for binary classification, or a (log) softmax function with Negative Log-Likelihood Loss for multi-class classification.
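The relationship between these functions can be sketched in a few lines of standard-library Python. The function names below are illustrative choices, not taken from the article or from any particular framework.

```python
import math


def sigmoid(x):
    """Logistic function, written to avoid overflow for large |x|."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def softmax(logits):
    """Turn a list of logits into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def binary_cross_entropy(y_true, p, eps=1e-12):
    """Log loss for one example; y_true is 0 or 1, p is the predicted P(y=1)."""
    p = min(max(p, eps), 1.0 - eps)  # keep log() away from 0
    return -(y_true * math.log(p) + (1 - y_true) * math.log(1.0 - p))


def cross_entropy(logits, target_index):
    """Negative log-likelihood of the target class under softmax(logits)."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(v - m) for v in logits))
    return log_sum_exp - logits[target_index]


# With two classes and logits [0, x], softmax reduces to the sigmoid,
# and the two losses agree.
x = 2.0
print(softmax([0.0, x])[1], sigmoid(x))
print(cross_entropy([0.0, x], 1), binary_cross_entropy(1, sigmoid(x)))
```

The last two lines print matching pairs, which is why sigmoid with binary cross-entropy can be seen as the two-class special case of softmax with negative log-likelihood.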

How to Generate Synthetic Tabular Dataset

Synthetic data is used in healthcare, self-driving cars, and finance, both to maintain a high level of privacy and for research purposes (Towards Data Science). Researchers and data scientists are using synthetic data to build new products, improve the performance of machine learning models, replace sensitive data, and save costs in acquiring the data. To produce synthetic tabular data, we will use conditional generative adversarial networks from open-source Python libraries called CTGAN and Synthetic Data Vault.

Neuro-symbolic AI brings us closer to machines with common sense

Neuro-symbolic systems are being explored as a way to overcome barriers to general-purpose AI systems. Joshua Tenenbaum, professor of computational cognitive science at the Massachusetts Institute of Technology, spoke at the IBM Neuro-Symbolic AI Workshop. He says the goal is to go beyond the idea of intelligence as recognizing patterns in data and approximating functions, and to move toward the things the human mind does.

Level Up Your MLOps Journey with Kedro

Kedro is an open-source Python project that aims to help ML practitioners create modular, maintainable, and reproducible pipelines. There are a dozen tools and frameworks out there to support MLOps, and MLOps is taking off. As a rapidly evolving field, it has many challenges, such as fixing server cables, engineering pipelines, outbound integrations, and monitoring code.

Feature Stores for Real-time AI & Machine Learning

The most important characteristic of a feature store for real-time AI/ML is the feature serving speed from the online store to the ML model for online predictions or scoring. Successful feature stores can meet stringent latency requirements (measured in milliseconds) at scale (up to hundreds of thousands of queries per second) while maintaining a low total cost of ownership and high accuracy. The choice of online feature store, as well as the architecture of the feature store, plays an important role in determining how performant and cost-effective it is.

From Google Colab to a Ploomber Pipeline: ML at Scale with GPUs

Google Colab is pretty straightforward: you can open a notebook in a managed Jupyter environment, train with free GPUs, and share the Drive notebooks. It also has a Git interface to some extent (mirroring the notebook into a repository). I wanted to scale my notebook from the exploratory data analysis (EDA) stage to a working pipeline that can run in production.

A Quick-Reference Checklist for A/B Testing

The checklist isn’t meant to be a comprehensive guide to A/B testing. Instead, you can bookmark the checklist and reference it when required. Decide if you will test more than one variant or if you’ve never run a test before. Determine the sample size for each group (power analysis). Calculate how long your experiment will run.
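As a rough illustration of the sample-size and duration steps, the sketch below uses the standard normal-approximation formula for comparing two conversion rates. The function names, the default significance level and power, and the assumption of a two-sided test with equal group sizes are choices made here, not details from the checklist itself.

```python
import math
from statistics import NormalDist


def sample_size_per_group(p_baseline, p_variant, alpha=0.05, power=0.8):
    """Approximate users needed per group to detect p_baseline vs p_variant."""
    if p_baseline == p_variant:
        raise ValueError("the two rates must differ to define an effect size")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance
    z_beta = NormalDist().inv_cdf(power)           # desired statistical power
    variance = p_baseline * (1 - p_baseline) + p_variant * (1 - p_variant)
    n = (z_alpha + z_beta) ** 2 * variance / (p_baseline - p_variant) ** 2
    return math.ceil(n)


def duration_days(n_per_group, n_groups, daily_visitors):
    """Days needed to collect the full sample at a given daily traffic."""
    return math.ceil(n_per_group * n_groups / daily_visitors)


# Hypothetical numbers: 10% baseline conversion, hoping to detect 12%.
n = sample_size_per_group(0.10, 0.12)
print(n, duration_days(n, n_groups=2, daily_visitors=1000))
```

Smaller expected differences drive the required sample size up quickly, since the difference appears squared in the denominator. That is why the minimum detectable effect should be fixed before the test starts.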

Data-centric AI: Practical implications with the SMART Pipeline

The latest trend in the ML community is the rise of what is termed data-centric AI. Its premise is that the most relevant component of an AI system is the data that it was trained on, rather than the model or sets of models that it uses. In this article we will go over a few practical steps for how to properly think about and implement data-centric AI.