Yann LeCun on a vision to make AI systems learn and reason like animals and humans

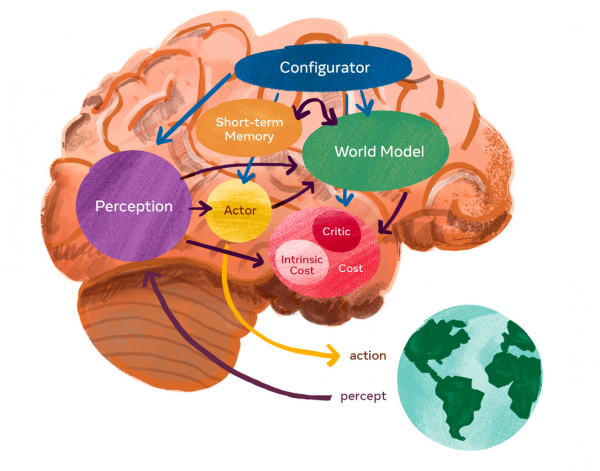

Meta AI’s Chief AI Scientist Yann LeCun is sketching an alternative vision for building human-level AI. He proposes that the ability to learn “world models” (internal models of how the world works) may be the key. By contrast, today’s best autonomous driving systems need millions of pieces of labeled training data and millions of reinforcement learning trials in virtual environments.

Machine learning can help read the language of life

For a third of all the proteins that organisms produce, we simply don’t know what they do. DeepMind showed that AlphaFold can predict the shape of protein machinery with unprecedented accuracy. A protein’s shape provides very strong clues about how that machinery can be used, but it does not settle the question on its own. We describe how neural networks can reliably reveal the function of this “dark matter” of the protein universe, outperforming state-of-the-art methods.

Can CPUs leverage sparsity?

In the human neocortex, neuron interconnections are extremely sparse: each neuron connects to only a small fraction of the cortex’s neurons. We can apply this neuroscience insight to deep learning and create ‘sparse’ neural networks. Remarkably, it is possible to build sparse networks that match the accuracy of a comparable dense network even when each neuron connects to as few as 5% of the neurons in the previous layer and fewer than 10% of neurons are active at a time.
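
To make the two kinds of sparsity concrete, here is a minimal pure-Python sketch of one layer that combines sparse weights (each neuron wired to 5% of its inputs) with sparse activations (only the top 10% of neurons stay active). The helper names `sparse_weight_mask`, `k_winners` and `sparse_layer`, the layer sizes, the random wiring, and the k-winners-take-all rule are illustrative assumptions, not Numenta's implementation.

```python
import random

def sparse_weight_mask(n_in, n_out, density, rng):
    """Hypothetical helper: each output neuron connects to a random subset of inputs."""
    k = max(1, round(density * n_in))
    return [sorted(rng.sample(range(n_in), k)) for _ in range(n_out)]

def k_winners(values, fraction):
    """Assumed activation rule: keep the top `fraction` of activations, zero the rest."""
    k = max(1, round(fraction * len(values)))
    ranked = sorted(range(len(values)), key=lambda i: values[i], reverse=True)
    winners = set(ranked[:k])
    return [v if i in winners else 0.0 for i, v in enumerate(values)]

def sparse_layer(x, connections, weights, active_fraction):
    """Each neuron sums only over its few connected inputs, then k-WTA sparsifies the output."""
    pre = [sum(w * x[i] for i, w in zip(conn, ws))
           for conn, ws in zip(connections, weights)]
    return k_winners(pre, active_fraction)

rng = random.Random(0)
n_in, n_out = 200, 100
conns = sparse_weight_mask(n_in, n_out, density=0.05, rng=rng)  # 10 of 200 inputs per neuron
weights = [[rng.gauss(0, 1) for _ in c] for c in conns]
x = [rng.random() for _ in range(n_in)]
y = sparse_layer(x, conns, weights, active_fraction=0.10)

print(sum(len(c) for c in conns), "weights instead of", n_in * n_out)  # 1000 weights instead of 20000
print(sum(1 for v in y if v != 0.0), "of", n_out, "neurons active")    # 10 of 100 neurons active
```

The layer stores and multiplies 1,000 weights rather than 20,000, and passes only 10 non-zero activations to the next layer; whether that saving turns into real speed depends on the hardware, which is the question the article raises.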

Geometric deep learning on molecular representations

Geometric deep learning (GDL) is based on neural network architectures that incorporate and process symmetry information. GDL bears promise for molecular modelling applications that rely on molecular representations with different symmetry properties and levels of abstraction. This Review provides a structured and harmonized overview of molecular GDL.
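
One simple way to respect a symmetry is to feed a model a representation that is invariant to it. The sketch below, using made-up coordinates for a three-atom molecule, shows that raw 3-D coordinates change when the molecule is rotated while its pairwise-distance matrix does not. It illustrates rotational invariance only; it is not one of the GDL architectures covered in the Review, and the helper names are hypothetical.

```python
import math

def rotate_z(points, theta):
    """Rotate 3-D coordinates about the z-axis by angle theta (radians)."""
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y, s * x + c * y, z) for x, y, z in points]

def distance_matrix(points):
    """Pairwise Euclidean distances: unchanged by any rotation or translation."""
    return [[math.dist(p, q) for q in points] for p in points]

# Illustrative assumption: rough, water-like coordinates in ångströms.
atoms = [(0.0, 0.0, 0.0), (0.96, 0.0, 0.0), (-0.24, 0.93, 0.0)]
rotated = rotate_z(atoms, theta=1.2)

print(atoms[1] == rotated[1])  # False: raw coordinates change under rotation
same = all(math.isclose(a, b, abs_tol=1e-9)
           for ra, rb in zip(distance_matrix(atoms), distance_matrix(rotated))
           for a, b in zip(ra, rb))
print(same)  # True: the distance matrix is rotation-invariant
```

Distance matrices discard the orientation entirely; many GDL models instead keep orientation information and make their layers equivariant, which is one of the design trade-offs between molecular representations.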

Amazon at WSDM: The future of graph neural networks

George Karypis, a senior principal scientist at Amazon Web Services, is one of the keynote speakers at this year’s Conference on Web Search and Data Mining (WSDM). His topic will be graph neural networks, his chief area of research at Amazon. Graph neural networks (GNNs) represent the information contained in graphs as vectors so that other machine learning models can use it.
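
A common way GNNs turn graph structure into vectors is message passing, where each node repeatedly combines its own vector with its neighbours’ vectors. The sketch below shows one weight-free round of mean aggregation on a hypothetical four-node graph; real GNNs add learnable weight matrices and nonlinearities, and nothing here reflects a specific Amazon model.

```python
def message_passing_step(adj, features):
    """One round of mean-aggregation message passing.

    Each node's new vector is the average of its own vector and its neighbours' vectors.
    """
    new = {}
    for node, vec in features.items():
        group = [vec] + [features[n] for n in adj[node]]
        new[node] = [sum(v[i] for v in group) / len(group) for i in range(len(vec))]
    return new

# Hypothetical undirected graph (each edge listed in both directions) and 2-D node features.
adj = {"a": ["b", "c"], "b": ["a"], "c": ["a"], "d": []}
features = {"a": [1.0, 0.0], "b": [0.0, 1.0], "c": [0.0, 1.0], "d": [1.0, 1.0]}

h = message_passing_step(adj, features)
print(h["a"])  # [0.3333333333333333, 0.6666666666666666]
print(h["d"])  # [1.0, 1.0]: an isolated node keeps its own vector
```

Applying the step k times lets each node’s vector reflect its k-hop neighbourhood, and the resulting vectors can then be fed to any downstream model.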

What’s next for deep learning?

The Association for the Advancement of Artificial Intelligence (AAAI) had its first meeting in 1980. Two of its first presidents were John McCarthy and Marvin Minsky, both participants in the 1956 Dartmouth Summer Research Project on Artificial Intelligence, which launched AI as an independent field of study. Many people date the deep-learning revolution to 2012, when Alex Krizhevsky’s deep network AlexNet won the ImageNet object recognition challenge with a 40% lower error rate than the second-place finisher.

A deep generative model for molecule optimization via one fragment modification

A case-based interpretable deep learning model for classification of mass lesions in digital mammography

An explorer in the sprawling universe of possible chemical combinations

Heather Kulik is working on a catalyst for converting methane gas directly to liquid methanol at the site where it is extracted from the Earth. Her lab is mapping out how chemical structures relate to chemical properties in order to create new materials tailored to particular applications. Kulik was mathematically precocious: she did arithmetic as a toddler and took college-level classes in middle school.

A Tale of Two Sparsities: Activation and Weight

Machine learning has not yet successfully harnessed sparse activations combined with sparse weights. Numenta has introduced an architecture for running such sparse-sparse networks on existing hardware. Its Complementary Sparsity technique combines several sparse convolution kernels into a single dense structure. Together, the two types of sparsity could drive down the computational cost of neural networks by two orders of magnitude compared with dense approaches.
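
The packing idea can be sketched in one dimension: if several sparse kernels have non-zero weights at non-overlapping positions, they can be overlaid into one dense kernel, multiplied with the input in a single pass, and the products routed back to the kernel that owns each position. This is a toy illustration under that assumption; the kernel values and helper names are hypothetical, and a pure-Python loop shows only the bookkeeping, not Numenta’s hardware speedup.

```python
def pack_kernels(kernels):
    """Overlay sparse kernels whose non-zero positions do not overlap.

    Returns one dense kernel plus, for each position, the index of the kernel that owns it.
    """
    size = len(kernels[0])
    dense = [0.0] * size
    owner = [None] * size
    for k, kernel in enumerate(kernels):
        for i, w in enumerate(kernel):
            if w != 0.0:
                if owner[i] is not None:
                    raise ValueError(f"kernels {owner[i]} and {k} overlap at position {i}")
                dense[i], owner[i] = w, k
    return dense, owner

def packed_dot(dense, owner, x, n_kernels):
    """Multiply the dense kernel with x once, then sum each product into its owner's output."""
    outputs = [0.0] * n_kernels
    for w, k, xi in zip(dense, owner, x):
        if k is not None:
            outputs[k] += w * xi
    return outputs

# Illustrative assumption: three 6-element sparse kernels with disjoint non-zero positions.
kernels = [
    [0.5, 0.0, 0.0, 0.0, 2.0, 0.0],
    [0.0, 1.0, 0.0, 0.0, 0.0, -1.0],
    [0.0, 0.0, 3.0, 0.0, 0.0, 0.0],
]
x = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]

dense, owner = pack_kernels(kernels)
print(packed_dot(dense, owner, x, len(kernels)))                 # [10.5, -4.0, 9.0]
print([sum(w * xi for w, xi in zip(k, x)) for k in kernels])     # [10.5, -4.0, 9.0], kernel by kernel
```

The overlap check matters: the trick only works when the kernels’ sparsity patterns are complementary, so each position in the packed kernel belongs to exactly one original kernel.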

Using hyperboloids to improve product retrieval

Researchers at Amazon and elsewhere have been investigating the idea of hyperbolic embedding, or embedding data as higher-dimensional analogues of rectangles on a curved surface. This has numerous advantages, one of which is the ability to capture hierarchical relationships between data points. In experiments, we compared this approach to nine different methods that use vector embeddings and one that embeds data as rectangular boxes in Euclidean space.
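
Why curved space suits hierarchies can be seen from the standard distance in the Poincaré-ball model of hyperbolic space, d(u, v) = arcosh(1 + 2‖u − v‖² / ((1 − ‖u‖²)(1 − ‖v‖²))). The sketch below does not reproduce the hyperboloid method described in the article; it only shows that two hypothetical “leaf” points near the boundary, only 0.1 apart in Euclidean terms, are far apart hyperbolically, which leaves room for many branches of a tree.

```python
import math

def poincare_distance(u, v):
    """Geodesic distance between two points inside the unit Poincaré ball."""
    def sq(p):
        return sum(c * c for c in p)
    diff = sq([a - b for a, b in zip(u, v)])
    return math.acosh(1 + 2 * diff / ((1 - sq(u)) * (1 - sq(v))))

# Illustrative assumption: a "root" at the origin and two "leaves" near the boundary.
root = (0.0, 0.0)
leaf_a, leaf_b = (0.95, 0.05), (0.95, -0.05)

print(round(math.dist(leaf_a, leaf_b), 3))           # 0.1 (Euclidean)
print(round(poincare_distance(leaf_a, leaf_b), 3))   # about 1.84 (hyperbolic)
print(round(poincare_distance(root, leaf_a), 3))     # about 3.69 (hyperbolic)
```

Because distances grow rapidly toward the boundary, the available “room” grows roughly exponentially with distance from the origin, much as the number of nodes in a tree grows with depth.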

Physics-based machine learning for subcellular segmentation in living cells

Machine learning is proving vital for analyzing cell-scale optical microscopy images and videos in the life sciences. However, artificial intelligence and machine learning solutions are lacking for the analysis of small, dynamic subcellular structures such as mitochondria and vesicles, which limits how subcellular mechanisms can be studied. A computer-vision (CV)-centric approach holds untapped potential for gaining unprecedented insights.

Startup Taps Finance Micromodels for Data Annotation Automation

Ulrik Hansen and Eric Landau founded Encord to adapt the micromodels typical in finance to automated data annotation. Micromodels are neural networks trained on less data for specific tasks, so they take less time to deploy. Encord’s NVIDIA GPU-driven service promises to automate as much as 99 percent of businesses’ manual data labeling.

Can machine-learning models overcome biased datasets?

MIT researchers studied how training data affects how artificial neural networks learn to recognize objects they have not seen before. A neural network is a machine-learning model that loosely mimics the human brain: it contains layers of interconnected nodes, or “neurons,” that process data. The researchers say diversity in the training data has a major influence on whether a neural network can overcome bias.

AI-generated characters for supporting personalized learning and well-being

The idea of computers generating content has been around since the 1950s. Some of the earliest attempts focused on replicating human creativity by having computers generate visual art and music. It has taken decades and major leaps in artificial intelligence (AI) for generated content to reach a high level of realism. Recent advances in generative models include generative adversarial networks (GANs), which have enabled the hyper-realistic synthesis of digital content.

Comparing Hinton’s GLOM Model to Numenta’s Thousand Brains Theory

Geoffrey Hinton recently published a paper “How to Represent Part-Whole Hierarchies in a Neural Network” and presented a new theory called GLOM. The Thousand Brains Theory is a sensorimotor theory that models the common circuit in the neocortex and suggests a new way of thinking about how our brain works. We learn a model of the world by observing how our sensory inputs change as we move.

Combining views for newly sequenced organisms

Fewer than 1% of available protein sequences are reliably annotated. Functional characterisation of newly sequenced proteins therefore increasingly relies on transferring annotations from well-studied homologous proteins in other organisms. Functional networks offer another route: proteins encoded by co-expressed genes, or proteins that interact to form a single complex, are likely to share molecular functions. Transferring functional annotations through such network relationships provides a distinct perspective for studying the protein functions of a newly sequenced organism.
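
The simplest form of network-based annotation transfer is “guilt by association”: rank candidate functions for an unannotated protein by how many of its network partners carry each one. The sketch below uses a hypothetical network and made-up annotations; it is a plain majority vote, not the method used in the article.

```python
from collections import Counter

def predict_functions(network, annotations, protein, top_n=1):
    """Guilt by association: rank functions by how many network partners carry them."""
    votes = Counter()
    for partner in network.get(protein, []):
        votes.update(annotations.get(partner, set()))
    return [func for func, _ in votes.most_common(top_n)]

# Illustrative assumption: edges join co-expressed or physically interacting proteins.
network = {"P_new": ["P1", "P2", "P3"]}
annotations = {
    "P1": {"DNA repair"},
    "P2": {"DNA repair", "cell cycle"},
    "P3": {"transport"},
}

print(predict_functions(network, annotations, "P_new"))  # ['DNA repair']
```

Here two of the three partners are annotated with DNA repair, so it wins the vote; practical methods usually weight edges by confidence and combine several network views rather than counting neighbours equally.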

Economic Impacts Research at OpenAI

OpenAI is launching a call for expressions of interest from researchers interested in evaluating the economic impact of Codex, its AI system that translates natural language to code. OpenAI recognizes that its decisions about AI system design and deployment can influence economic impacts. Despite remarkable technological progress over the past several decades, gains in economic prosperity have not been widely distributed. In the US, trends in both income and wealth inequality over the last forty years demonstrate a worrying pace of economic divergence.

Fast AND Accurate Sparse Networks

Deep neural networks (DNNs) have become the models of choice for an ever-increasing range of applications, delivering state-of-the-art results across many of the domains where they are deployed. These models are often extremely complex, and training and deploying them takes significant computational resources. The resulting costs are real, both in cloud spending and in the cost to the planet from the associated energy consumption.

SEER 10B: Better, fairer computer vision through self-supervised learning on diverse datasets

Meta AI Research’s new self-supervised computer vision model can learn from any random collection of images on the internet, without the careful data curation and labeling that conventional computer vision training requires. SEER is now not only much more powerful but also produces fairer, more robust computer vision models, and it can discover salient information in images, similar to how humans learn about the world by considering the relationships between the different objects they observe.