A First-Principles Theory of Neural Network Generalization

Many empirical phenomena, well-known to deep learning practitioners, remain mysteries to theoreticians. Perhaps the greatest of these mysteries has been the question of generalization: why do the functions learned by neural networks generalize so well to unseen data? In our recent paper, we derive a first-principles theory that allows one to make accurate predictions of neural network generalization (at least in certain settings)

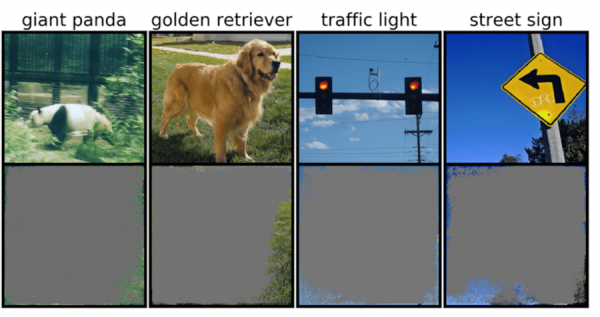

How well do explanation methods for machine-learning models work?

MIT researchers modify data so they can be certain which features are important to the neural network. They find that even the most popular methods often miss the important features in an image. This could have major implications, especially if neural networks are applied in high-stakes situations like medical diagnoses. If the network isn’t working properly, human experts may have no idea they are misled by the faulty model.

Amazon pushes the boundaries of extreme multilabel classification

In the past few years, we’ve published a number of papers about extreme multilabel classification (XMC), or classifying input data when the number of candidate labels is huge. With label partitioning, labels are first grouped into clusters, and a matcher model is trained to assign inputs to clusters. We demonstrate a method for assigning labels to multiple clusters that improves classification accuracy with a negligible effect on efficiency.

Solving (Some) Formal Math Olympiad Problems

We achieved a new state-of-the-art (41.2% vs 29.3%) on the miniF2F benchmark, a challenging collection of high-school olympiad problems. Our approach consists of manually collecting a set of statements of varying difficulty levels (without proof) where the hardest statements are similar to the benchmark we target. Initially our neural prover is weak and can only prove a few of them. We iteratively search for new proofs and re-train our neural network on the newly discovered proofs, and after 8 iterations, our prover ends up being vastly superior.

The Challenge Of Interpreting Language in NLP

Why teaching machines to understand language is such a difficult undertaking. Enabling our machines to understand text has been a daunting task. Although a lot of progress has been made on this front, we are still a long way from creating an approach towards seamlessly converting our language into readable data for our machines.

“Ambient intelligence” will accelerate advances in general AI

As the world has become more connected, and computing has permeated our surroundings, a new AI paradigm is emerging. In this paradigm, our environment responds to our requests and anticipates our needs, provides information or suggests actions. This vision of ambient intelligence is not that different from the one on Star Trek. For most of the last decade, the focus has been reactive assistance — for example, ensuring that customer-initiated requests to Alexa meet customers’ expectations.

Reward Isn’t Free: Supervising Robot Learning with Language and Video from the Web

Building the quintessential home robot that can perform a range of interactive tasks, from cooking to cleaning, in novel environments has remained elusive. While a number of hardware and software challenges remain, a necessary component is robots that can generalize their prior knowledge to new environments, tasks, and objects in a zero or few shot manner. This work was conducted as part of SAIL and CRFM.

The rise and fall (and rise) of datasets

Machine learning community has identified an alarming number of potential legal and ethical problems with popular image datasets. These typically contain images scraped from the internet, in particular from sharing platforms such as Flickr. This often happens without the explicit permission from, or even awareness of, the people who generated the data. Training machine learning algorithms on copyrighted data is generally considered ‘fair use’ on the basis that it amounts to transformative use of the original data.

On-device speech processing makes Alexa faster, lower-bandwidth

On-device speech processing has multiple benefits: a reduction in latency and bandwidth consumption. Amazon is launching a new setting that gives customers the option of having the audio of their Alexa voice requests processed locally, without being sent to the cloud. In the cloud, storage space and computational capacity are effectively unconstrained. Executing the same functions on-device means compressing our models into less than 1% as much space — with minimal loss in accuracy.

BanditPAM: Almost Linear-Time k-medoids Clustering via Multi-Armed Bandits

The state-of-the-art \(k\)-medoids algorithm from NeurIPS, BanditPAM, is now publicly available. It’s written in C++ for speed and supports parallelization and intelligent caching, at no extra complexity to end users. The solution is often different from the best known algorithm for the \(k-means problem.

Catching up on Numenta: Highlights of 2021

In the neocortex, the activity of neurons is sparse, meaning only a small fraction of the brain’s neurons are active at any given moment and individual neurons are only interconnected with a small part of the total neurons in the brain. In 2021, we made significant progress in introducing these two key aspects of brain sparsity into machine learning algorithms and existing hardware systems. Ultimately, we think sparsity provides a clear path to how we can scale AI systems.

Interpreting neural networks for biological sequences by learning stochastic masks

Scrambler network architecture is based on groups of residual blocks. Each residual block consists of 2 dilated convolutions, each preceded by batch normalization and ReLU activations. A softplus activation is applied to the final tensor in order to get importance scores that are strictly larger than 0. (a) Comparison of attribution methods on the ‘Inclusion’-benchmark of Fig. 2b.

Neurons learn by predicting future activity

The contrastive Hebbian learning algorithm requires a network to converge to steady-state equilibrium in two separate learning phases. Here we propose to solve this problem by combining both activity phases into one, inspired by sensory processing in the cortex. For example, in visual areas, there is initially bottom-up-driven activity containing mostly visual attributes of the stimulus (for example, contours) This is then followed by top-down modulation containing more abstract information.

Hierarchical representations improve image retrieval

Image matching usually works by mapping images to a representational space (an embedding space) and finding images whose mappings are nearby. In experiments that compared our approach to nine predecessors on five different datasets, we find that our approach delivers the best results a large majority of the time. In our paper, we show how to leverage such hierarchies when building image retrieval systems or, if no hierarchies exist, how to construct them.

Using NLU labels to improve an ASR rescoring model

When someone speaks to a voice agent, an automatic speech recognition (ASR) model converts the speech to text. A natural-language-understanding (NLU) model interprets the text, giving the agent structured data that it can act on. The idea is that adding NLU tasks, for which labeled training data are generally available, can help the language model ingest more knowledge, which will aid in recognition of rare words.

Nonsense can make sense to machine-learning models

MIT scientists identify ‘overinterpretation’ where algorithms make confident predictions based on details that don’t make sense to humans. Models trained on popular datasets like CIFAR-10 and ImageNet suffered from overinterpretation. This could be particularly worrisome for high-stakes environments, like split-second decisions for self-driving cars and medical diagnostics for diseases that need more immediate attention.

Computing for ocean environments

Wim van Rees, the ABS Career Development Professor at MIT, is developing and using numerical simulation approaches to explore the design space for underwater devices that have an increase in degrees of freedom, for instance due to fish-like, deformable fins. Thanks to advances in additive manufacturing, optimization techniques, and machine learning, we are closer than ever to replicating flexible and morphing fish fins for use in underwater robotics.

RECON: Learning to Explore the Real World with a Ground Robot

A robot is tasked with navigating to a goal location, specified by an image taken at \(G\) Our method uses an offline dataset of trajectories, over 40 hours of interactions in the real-world, to learn navigational affordances and builds a compressed data-driven system. We tackle these problems by teaching the robot to explore using only real world data. The robot needs to generalize to unseen neighborhoods and recognize visual and dynamical similarities across scenes.

WACV: Transformers for video and contrastive learning

The Transformer is a neural-network architecture that uses attention mechanisms to improve performance on machine learning tasks. When processing part of a stream of input data, the Transformer attends to data from other parts of the stream, which influences its handling of the data at hand. Transformers have enabled state-of-the-art performance on natural-language-processing tasks because of their ability to model long-range correlations.

Which Mutual Information Representation Learning Objectives are Sufficient for Control?

Processing raw sensory inputs is crucial for applying deep RL algorithms to real-world problems. For example, autonomous vehicles must make decisions about how to drive safely. Direct “end-to-end” RL that maps sensor data to actions can be very difficult because the inputs are high-dimensional, noisy, and contain redundant information. Contrastive learning methods have proven effective on RL benchmarks such as Atari and DMControl.